🔍 Robots.txt Generator – How to Use the Tool & Why It Matters

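The robots.txt file is one of the key components of technical SEO. It tells search engine crawlers which parts of your website they may crawl and which paths they should stay out of. A clean, properly configured robots.txt file keeps crawlers focused on the pages that matter, cuts wasted requests on low-value URLs, and reduces unnecessary crawler load on your server. Keep in mind that robots.txt is a request, not a lock. Reputable crawlers honor it, but the file is publicly readable, and a disallowed URL can still appear in search results if other sites link to it. Truly sensitive folders need password protection, and pages you want kept out of search results need a noindex tag rather than a Disallow rule.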
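To see how a crawler interprets these instructions, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules and the `ExampleBot` user agent are illustrative assumptions, not output from the generator. The parser only reports what the file permits; a bot that ignores robots.txt is not stopped by it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules (an assumption, not generated by the tool):
# every crawler may fetch anything except /admin/ and /tmp/.
RULES = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# can_fetch() reports what the file permits; it cannot force a bot to obey.
for path in ["/", "/blog/post-1", "/admin/login", "/tmp/cache.html"]:
    allowed = parser.can_fetch("ExampleBot", "https://example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")
```

Running it prints `allowed` for `/` and `/blog/post-1` and `blocked` for the two paths under `/admin/` and `/tmp/`, because a Disallow rule matches every URL path that starts with it.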

Robots.txt Generator – How It Works and Why You Should Use It

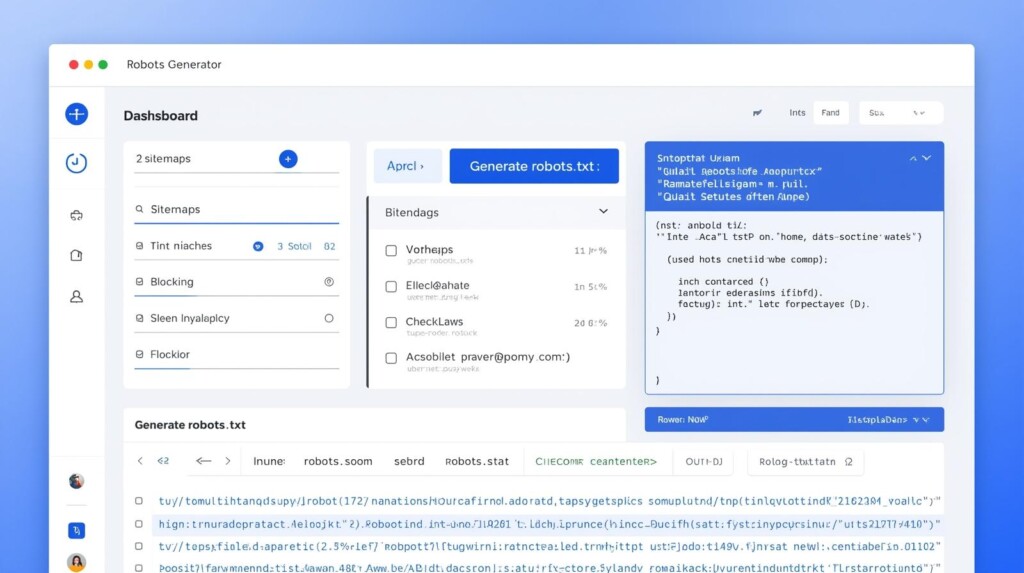

The Robots.txt Generator makes this process simple, even for beginners. Start by selecting your website type: general website, blog, e-commerce store, or WordPress. Each option applies a set of recommended preset rules, giving you a sensible starting point that you can then adjust.
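To make the idea of presets concrete, here is a minimal Python sketch that maps each website type to a default rule group. The preset contents are assumptions for illustration, not the generator's actual rules. Only the WordPress entry mirrors the defaults WordPress itself serves (blocking `/wp-admin/` while allowing `admin-ajax.php`). `PRESETS` and `render_preset` are hypothetical names.

```python
# Hypothetical presets: illustrative defaults, not the tool's real configuration.
PRESETS: dict[str, list[str]] = {
    "general": [],  # no restrictions
    "blog": ["Disallow: /search/"],  # keep internal search result pages out of the crawl
    "ecommerce": [
        "Disallow: /cart/",
        "Disallow: /checkout/",
        "Disallow: /account/",
    ],
    "wordpress": [
        "Disallow: /wp-admin/",
        "Allow: /wp-admin/admin-ajax.php",
    ],
}


def render_preset(site_type: str) -> str:
    """Return a 'User-agent: *' group containing the preset rules for site_type."""
    rules = PRESETS[site_type]
    if not rules:
        # An empty Disallow value means nothing is disallowed.
        rules = ["Disallow:"]
    return "\n".join(["User-agent: *", *rules]) + "\n"


print(render_preset("wordpress"))
```

Keeping presets as data in a dictionary means supporting a new website type is one new entry rather than new branching logic.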

Next, add one or more sitemap URLs as full absolute addresses (for example, https://example.com/sitemap.xml). Listing your sitemaps helps Google and other search engines discover your content faster. The tool also lets you block specific bots such as AhrefsBot, SemrushBot, GPTBot, DotBot, and others; note that a robots.txt block only works for crawlers that choose to honor it. For finer control, you can add custom disallow rules for any directories or pages you want to restrict.
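The sitemap, bot-blocking, and custom-rule options map directly onto robots.txt syntax. The following Python sketch, built around a hypothetical `build_robots_txt` function, shows one plausible way to assemble them: each blocked bot gets its own group with `Disallow: /`, every other crawler falls into a `User-agent: *` group carrying the custom rules, and sitemap URLs are listed at the end. This is an assumption about how such a generator could work, not the tool's actual code.

```python
def build_robots_txt(
    sitemaps: list[str],
    blocked_bots: list[str],
    custom_disallows: list[str],
) -> str:
    """Assemble a robots.txt file from the generator-style options (hypothetical helper)."""
    lines: list[str] = []

    # One group per blocked bot: "Disallow: /" asks that crawler to skip the whole site.
    for bot in blocked_bots:
        lines += [f"User-agent: {bot}", "Disallow: /", ""]

    # Default group for every other crawler, carrying the custom restrictions.
    lines.append("User-agent: *")
    if custom_disallows:
        lines += [f"Disallow: {path}" for path in custom_disallows]
    else:
        lines.append("Disallow:")  # empty value: nothing is disallowed
    lines.append("")

    # Sitemap lines are not tied to any user-agent group and take absolute URLs.
    lines += [f"Sitemap: {url}" for url in sitemaps]

    # Drop any trailing blank line, then end the file with a single newline.
    return "\n".join(lines).rstrip("\n") + "\n"


print(build_robots_txt(
    sitemaps=["https://example.com/sitemap.xml"],
    blocked_bots=["GPTBot", "AhrefsBot"],
    custom_disallows=["/private/", "/drafts/"],
))
```

Giving each blocked bot its own group keeps the rules for well-behaved general crawlers untouched, since a crawler follows the group that names it and falls back to `User-agent: *` otherwise.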

Once your settings are ready, click "Generate robots.txt" and the tool instantly produces a complete file tailored to your site. You can copy it or download it as a ready-to-use robots.txt file. Upload it to the root of your domain so it is served at https://example.com/robots.txt; crawlers do not look for it in subdirectories, and each subdomain needs its own file.
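A crawler derives the robots.txt location from the scheme, host, and port of the page it wants to fetch, which is why the file must sit at the root. A small helper makes the rule concrete; `robots_url` is a hypothetical name for illustration, built on the standard `urllib.parse` module.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Only the scheme and network location (host plus optional port) are kept;
    the path is replaced with /robots.txt and any query or fragment is dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://example.com/blog/post?id=7"))
print(robots_url("https://shop.example.com:8443/cart"))
```

Using `urlsplit` instead of string slicing keeps the port intact and discards query strings cleanly, so a page on `shop.example.com` correctly maps to that subdomain's own file.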

This tool is essential for anyone who wants better crawl efficiency, cleaner SEO hygiene, and more control over how search engines crawl their website, all without needing to write robots.txt syntax by hand.